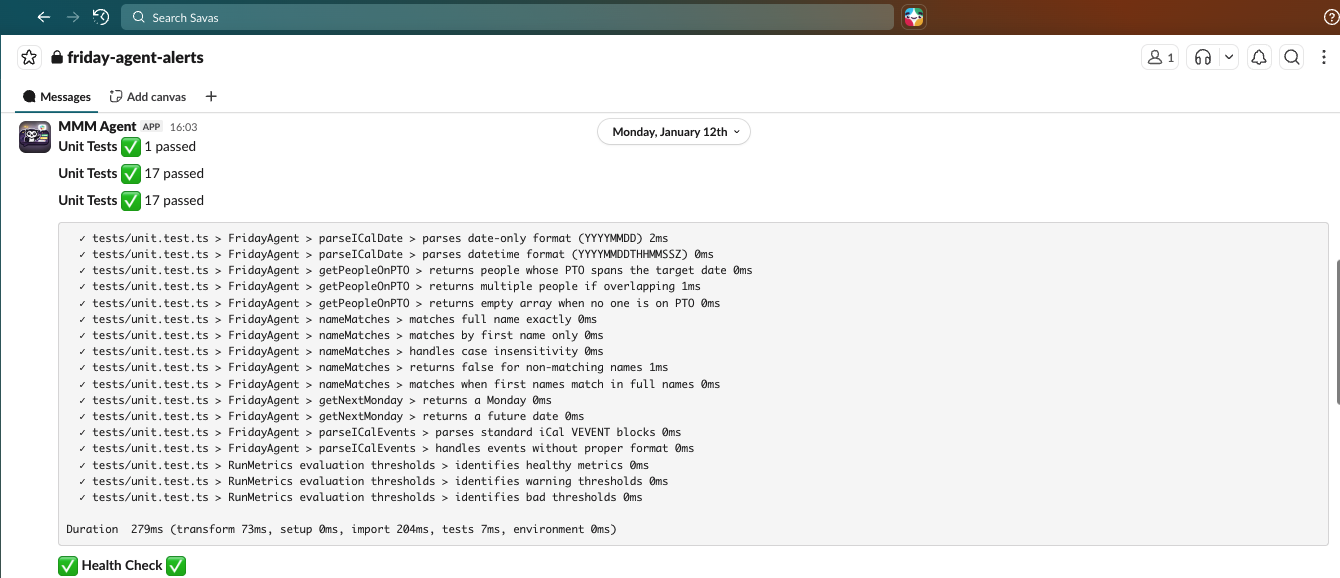

Even with passing unit tests, the randomization sometimes produced results that didn't feel 'fair' to the team, which is exactly the kind of edge case you want to catch in a low-stakes environment. Because the results were posted to Slack (a bit meta), we spotted these cases quickly and refined the logic.

Taking Next Steps After Low-Stakes Familiarization

These ideas aren’t theoretical. They’re how we approach Practical AI at Savas Labs.

We intentionally start with low-stakes use cases to understand how agentic workflows behave in real environments. From there, we apply agents only where the payoff clearly outweighs the added complexity.

You can see this philosophy in the products we’ve begun rolling out at https://savaslabs.com/solutions.

A few examples:

Keeping Systems Healthy and Secure

CMS Patch Pilot

Security updates for platforms like Drupal and WordPress are essential, but applying them safely is time-consuming and risky if rushed.

This solution:

- Monitors for relevant security updates

- Applies patches in staging environments first

- Runs checks to catch regressions

- Prepares review-ready changes for human approval

Nothing is deployed automatically to production.
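The staging-first, human-approval flow above can be pictured as a small state machine. The Python below is a minimal, hypothetical sketch, not Patch Pilot's actual implementation: `Stage`, `PatchRun`, `run_patch_pipeline`, and `approve` are illustrative names, and `apply_to_staging` and the regression checks are stand-ins you would supply. The property worth noticing is that no code path deploys to production; the furthest a run can get is `APPROVED`, and deployment stays a separate, human-driven step.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Stage(Enum):
    DETECTED = "detected"
    STAGED = "staged"
    CHECKS_FAILED = "checks_failed"
    AWAITING_APPROVAL = "awaiting_approval"
    APPROVED = "approved"


@dataclass
class PatchRun:
    # Hypothetical record of one security update moving through the pipeline.
    update_id: str
    stage: Stage = Stage.DETECTED
    log: list[str] = field(default_factory=list)


def run_patch_pipeline(
    run: PatchRun,
    apply_to_staging: Callable[[str], None],
    regression_checks: dict[str, Callable[[], bool]],
) -> PatchRun:
    """Apply an update to staging and run checks. Production is never touched."""
    apply_to_staging(run.update_id)
    run.stage = Stage.STAGED
    run.log.append(f"applied {run.update_id} to staging")

    # Each check is a zero-argument callable that returns True on success.
    failures = [name for name, check in regression_checks.items() if not check()]
    if failures:
        run.stage = Stage.CHECKS_FAILED
        run.log.append("checks failed: " + ", ".join(failures))
    else:
        run.stage = Stage.AWAITING_APPROVAL
        run.log.append("ready for human review")
    return run


def approve(run: PatchRun, reviewer: str) -> PatchRun:
    """Record a human sign-off. Deploying to production remains a separate manual step."""
    if run.stage is not Stage.AWAITING_APPROVAL:
        raise ValueError(f"cannot approve a run in stage {run.stage.value!r}")
    run.stage = Stage.APPROVED
    run.log.append(f"approved by {reviewer}")
    return run


# Example with a no-op staging step and checks that all pass.
run = run_patch_pipeline(
    PatchRun("drupal-security-update"),
    apply_to_staging=lambda update_id: None,
    regression_checks={"homepage_loads": lambda: True, "login_works": lambda: True},
)
print(run.stage.value)  # awaiting_approval
```

Making approval an explicit state transition, rather than a flag checked somewhere downstream, means a run whose checks failed cannot be approved by accident: `approve` raises instead.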

Outcome: Faster patch cycles, fewer surprises, and reduced operational load.

Here, the complexity is justified by risk reduction and operational value.

Improving Content Quality at Scale

Website Content Optimizer

Routine content audits are valuable, but often deprioritized.

This solution:

- Crawls your site and runs deterministic checks

- Uses AI judgment to assess clarity, tone, and alignment with goals

- Stages improvements for easy human review
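The split between deterministic checks and AI judgment is worth making concrete. Deterministic checks give the same answer on every run, so they are cheap to trust; the subjective questions (clarity, tone, goal alignment) are the part you would hand to a model. The Python below is a minimal, hypothetical sketch of the deterministic half only, built on the standard library's `html.parser`. The rules themselves (exactly one `<h1>`, a 60-character title limit, an `alt` attribute on every image) and the names `PageAudit` and `deterministic_findings` are illustrative assumptions, not the Website Content Optimizer's actual rule set.

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Collects simple, repeatable facts about one HTML page."""

    def __init__(self):
        super().__init__()
        self.images_missing_alt = 0
        self.h1_count = 0
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # An absent alt attribute is flagged; alt="" is allowed for decorative images.
        if tag == "img" and "alt" not in attrs:
            self.images_missing_alt += 1
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def deterministic_findings(html: str, max_title_len: int = 60) -> list[str]:
    """Run the rule-based checks and return human-readable findings."""
    audit = PageAudit()
    audit.feed(html)
    audit.close()

    findings = []
    title = audit.title.strip()
    if not title:
        findings.append("missing <title>")
    elif len(title) > max_title_len:
        findings.append(f"title longer than {max_title_len} characters")
    if audit.h1_count != 1:
        findings.append(f"expected one <h1>, found {audit.h1_count}")
    if audit.images_missing_alt:
        findings.append(f"{audit.images_missing_alt} image(s) missing alt text")
    return findings


page = "<html><head><title>Example page</title></head><body><h1>Hello</h1><img src='team.png'></body></html>"
print(deterministic_findings(page))  # ['1 image(s) missing alt text']
```

Findings like these can be staged alongside any model-generated suggestions, so a reviewer sees the objective issues and the judgment calls in one place.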

Outcome: Higher-quality content without automatic publishing or loss of control.

Content Tag & Taxonomy Auditor

Disorganized taxonomies quietly degrade search and navigation.

This solution:

- Maps tag usage and redundancy

- Evaluates structure against best practices

- Suggests improvements for review before any changes
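Mapping tag usage and redundancy can start with plain counting. The Python below is a hypothetical sketch: `normalize` and `tag_report` are illustrative names, and the normalization rule (lowercase, collapse spaces, hyphens, and underscores, drop a trailing plural "s") is a deliberately crude assumption. It will merge tags that merely look alike, such as "new" and "news", which is one reason output like this should be treated as suggestions for review rather than applied automatically.

```python
import re
from collections import Counter, defaultdict


def normalize(tag: str) -> str:
    """Crude, hypothetical key for spotting near-duplicate tags."""
    key = re.sub(r"[\s_-]+", " ", tag.strip().lower())
    # Naive singularization: "updates" -> "update", but "wordpress" is left alone.
    if key.endswith("s") and not key.endswith("ss"):
        key = key[:-1]
    return key


def tag_report(tagged_items: list[list[str]]):
    """Return usage counts, groups of likely-redundant tags, and single-use tags."""
    usage = Counter(tag for tags in tagged_items for tag in tags)
    groups = defaultdict(set)
    for tag in usage:
        groups[normalize(tag)].add(tag)
    redundant = {key: sorted(tags) for key, tags in groups.items() if len(tags) > 1}
    single_use = sorted(tag for tag, count in usage.items() if count == 1)
    return usage, redundant, single_use


items = [
    ["Drupal", "Security"],
    ["drupal", "security-updates"],
    ["Security Update", "WordPress"],
]
usage, redundant, single_use = tag_report(items)
print(redundant)
# {'drupal': ['Drupal', 'drupal'], 'security update': ['Security Update', 'security-updates']}
```

Deciding which variant in each group should survive, or whether a group is a genuine duplicate at all, is the part that benefits from AI judgment and, ultimately, human review.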

Outcome: Cleaner structure and better discoverability.

Reducing Operational Friction

Workflow Assistants

Some of the most valuable applications are also the least glamorous.

We’ve built agents to assist with:

- aggregating signals from recruiting applications

- reconciling invoices

- evaluating document quality and managing the submission workflow

- unsubscribing from marketing emails automatically

- planning trips

- preparing financial reports

These low-stakes workflows are often where teams first become comfortable with agentic systems, before applying them to higher-value processes.